The Facebook Files, an article series by the Wall Street Journal, casts a damning light on the internal workings of the social media giant. Favoritism toward higher-profile accounts, which were shielded from guideline enforcement, questionable algorithms and a toxic environment for teenagers on its Instagram platform are just a few of the allegations leveled against Mark Zuckerberg's company. Nevertheless, many U.S. residents still trust in Facebook when it comes to news consumption.

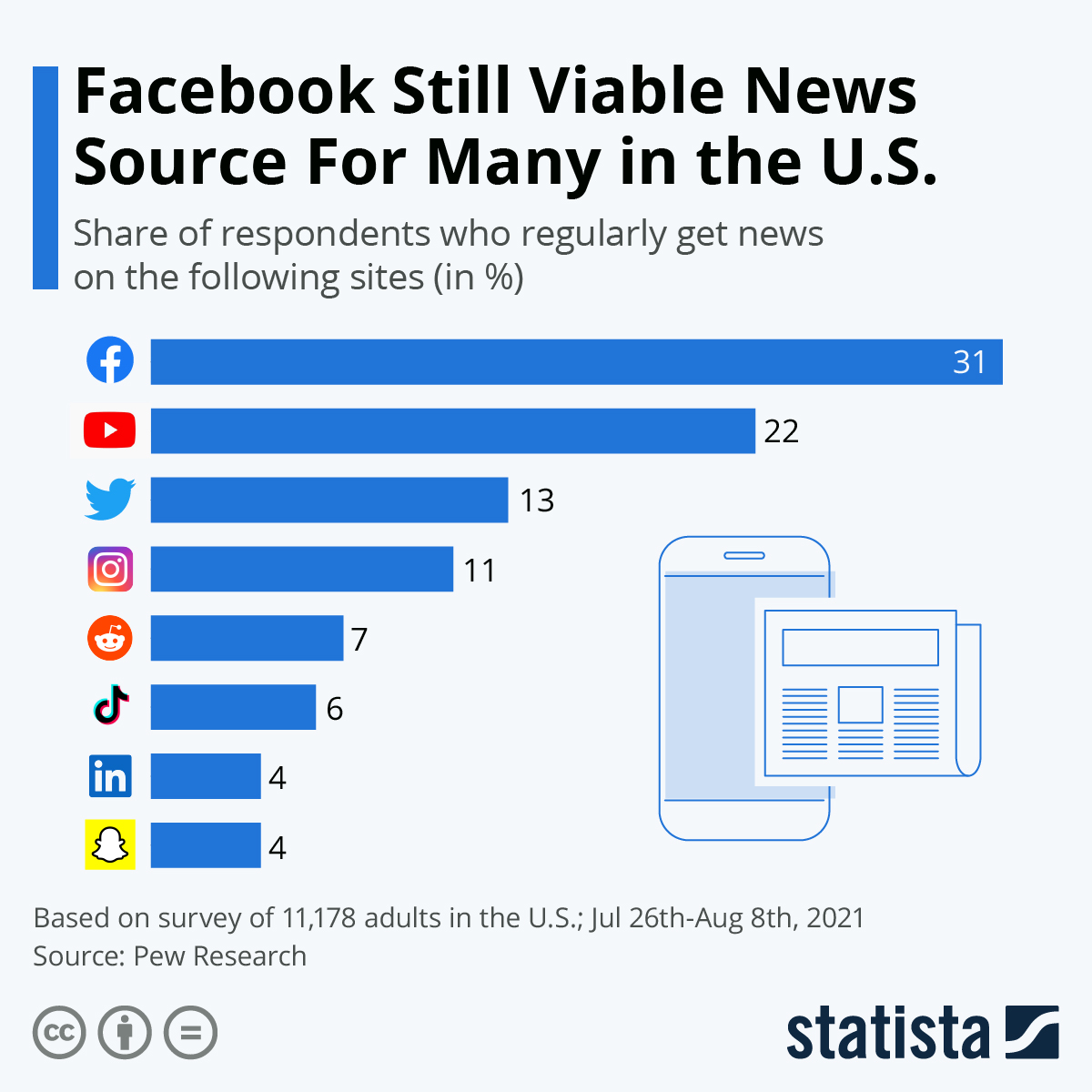

As data from Pew Research shows, 31 percent of respondents still regularly get news from Facebook. While this number is down from 36 percent in the previous year's survey, it is still far greater than the figures for its competitors: 22 percent said they regularly go to YouTube to get their news fix, while only 13 percent use Twitter for that purpose. What the survey does not capture are the sources of the news its respondents consume on social media channels. Since getting news on a platform could mean anything from browsing the channels of vetted publications to scrolling through random Facebook groups, those numbers should be taken with a grain of salt.

To underline its efforts to combat the spread of misinformation, graphic content and other breaches of its community standards, Facebook has created a Transparency Center detailing the actions taken against posts that do not conform to its guidelines. For example, according to its Community Standards Enforcement Report covering January to April 2021, the tech giant claimed it had removed 18 million posts containing misinformation about the coronavirus from Instagram and Facebook. Although commendable, this is most likely just a proverbial drop in the bucket, given that COVID-19 has been the dominant topic in online conversations over the past 18 months.