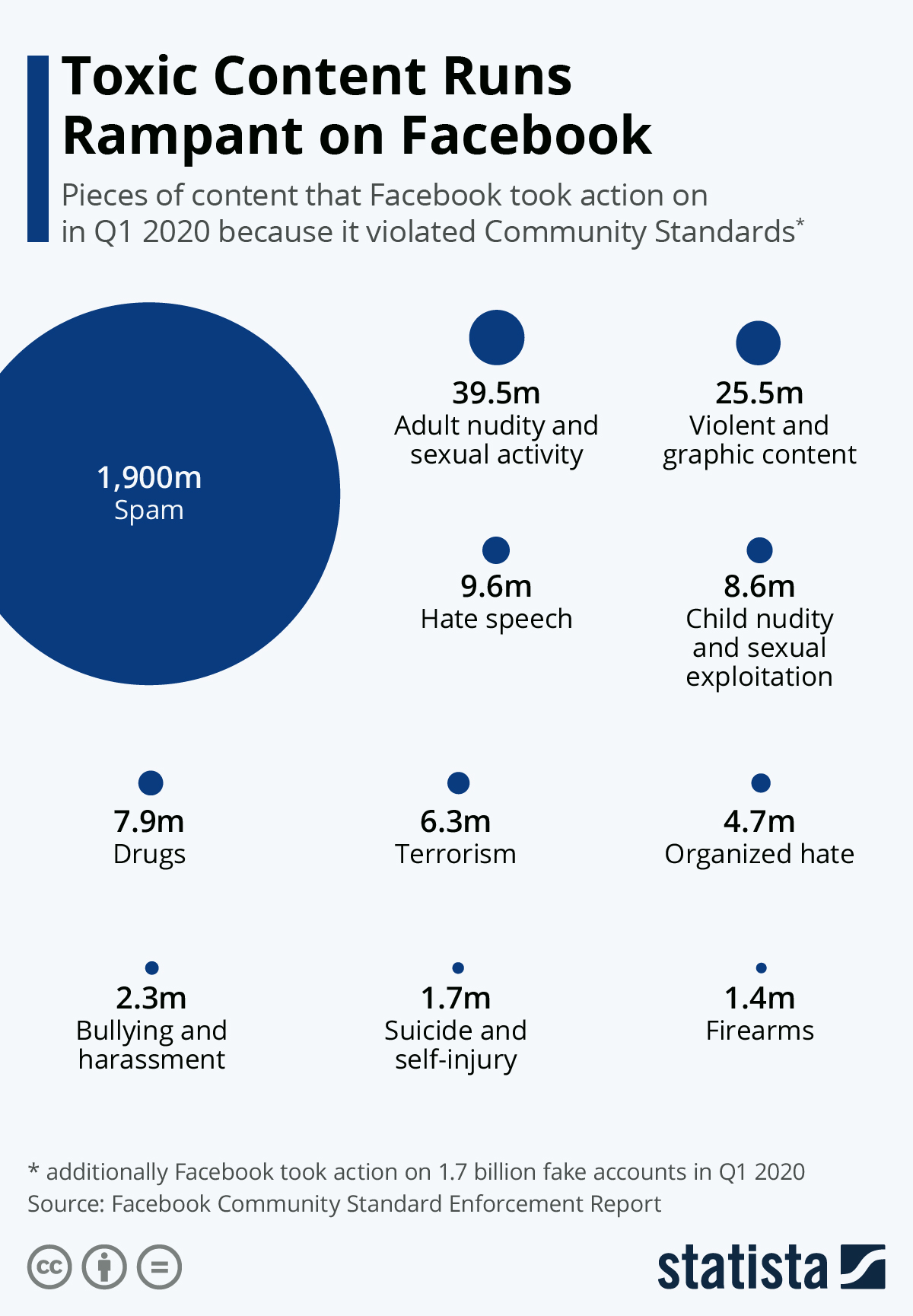

Facebook has published new figures showing how much controversial content it took action on (i.e. removed or flagged) in the first three months of 2020. Fake news, conspiracy theories and inflammatory content have been spreading online. Amid this, the social network and other online platforms have lately come under pressure to better police what happens on their watch. The content Facebook is actively trying to keep off its site falls into nine categories: graphic violence, adult nudity and sexual activity, dangerous organizations (terrorism and organized hate), hate speech, bullying and harassment, child nudity and sexual exploitation, suicide and self-injury, regulated goods (drugs and firearms) and, last but definitely not least, spam.

In Q1 2020, Facebook removed 1.9 billion posts categorized as spam. That was roughly 95 percent of all content it took action on (excluding fake accounts). It also took down or covered with a warning 25.5 million posts containing violent and graphic content. Facebook's technology found and flagged 99 percent of those posts before users reported them. Likewise, 99 percent of the posts removed or flagged for adult nudity or sexual activity were identified automatically before they were reported. In total, 39.5 million such posts were deleted or given warning labels.

Although it has improved, Facebook's technology is still less successful at identifying hate speech. The company took action against 9.6 million pieces of content for hate speech. Of those, only 89 percent were flagged by Facebook before users reported a violation of the platform's Community Standards. Spam is the most frequently removed type of content, and disabling fake accounts is critical to fighting it. During the first quarter, Facebook disabled 1.7 billion fake accounts, most of them within minutes of registration.

It's good to see Facebook stepping up its game at keeping toxic content off its platform, but doing so has created problems of its own. Earlier this week, the company announced a $52 million settlement in a class-action lawsuit brought by content moderators. These moderators suffered from post-traumatic stress disorder after being exposed to the graphic and disturbing content they were hired to review.